As you’ve no doubt heard, Washington, D.C., is angling for a takeover of the . . . U.S. telecom industry?!

That’s right: broadband, routers, switches, data centers, software apps, Web video, mobile phones, the Internet. As if its agenda weren’t full enough, the government is preparing a dramatic centralization of authority over our healthiest, most dynamic, high-growth industry.

Two weeks ago, FCC chairman Julius Genachowski proposed new “net neutrality” regulations, which he will detail on October 22. Then on Friday, Yochai Benkler of Harvard’s Berkman Center published an FCC-commissioned report on international broadband comparisons. The voluminous survey serves up data from around the world on broadband penetration rates, speeds, and prices. But the real purpose of the report is to make a single point: foreign “open access” broadband regulation, good; American broadband competition, bad. These two tracks, “net neutrality” and “open access,” combined with a review of the U.S. wireless industry and other investigations, lead straight to an unprecedented government intrusion into America’s vibrant Internet industry.

Benkler and his team of investigators can be commended for the effort that went into what was no doubt a substantial undertaking. The report, however,

- misses all kinds of important distinctions among national broadband markets, histories, and evolutions;

- uses lots of suspect data;

- underplays caveats and ignores some important statistical problems;

- focuses too much on some metrics, not enough on others;

- completely bungles America’s own broadband policy history; and

- draws broad and overly certain policy conclusions about a still-young, dynamic, complex Internet ecosystem.

The gaping, jaw-dropping irony of the report was its failure even to mention the chief outcome of America’s previous open-access regime: the telecom/tech crash of 2000-02. We tried this before. And it didn’t work! The Great Telecom Crash of 2000-02 was to that industry what the Great Panic of 2008 was to the financial industry: a deeply painful and historic plunge. In the case of the Great Telecom Crash, U.S. tech and telecom companies lost some $3 trillion in market value and one million jobs. The harsh open access policies (mandated network sharing, price controls) that Benkler lauds in his new report were a main culprit. But in Benkler’s 231-page report on open access policies, there is no mention of the Great Crash.

Although the report is subtitled “A review of broadband Internet *transitions* and policy from around the world” (emphasis added), Benkler does not correctly review the most pronounced and obvious transition in the very nation for which he would now radically remake policy. Benkler writes that the U.S. successfully tried “open access” in the late 1990s, but then

> the FCC decided to abandon this mode of regulation for broadband in a series of decisions in 2001 and 2002. Open access has been largely treated as a closed issue in U.S. policy debates ever since. [p. 11]

This is false.

In fact, open access and other heavy-handed regulations were rolled back in a series of federal actions from 2003 to 2005 (e.g., ’03 triennial review, ’05 Brand-X Supreme Court decision). And then between 2006 and 2009, a number of states adopted long-overdue reforms of decades-old telecom laws written for the pre-Internet age. (The Supreme Court even decided a DSL “line-sharing” case as late as February 2009.) If we had in fact ended open access regulation in 2001, as Benkler claims, perhaps the telecom crash would have been less severe.

Benkler would like to show that America’s “decline” in broadband corresponds to its open-access roll-back. But the chronology doesn’t fit. In fact, American broadband took a very large hit in the open-access era. In 2002, at the end of this open access experiment, George Gilder estimated that South Korea — first to deploy fiber-to-the-X and 3G wireless — enjoyed some 40 times America’s per capita bandwidth. Our international “rank” may have appeared better at the time, but we were far worse off compared to our broadband “potential.” Because the U.S. invented most of the Internet technologies and applications, we were bound to lead at the outset, in the mid- and late-1990s. Bad policy stalled our rise for a time, and other nations moved forward. But today we are back on track. The U.S. broadband trajectory is steep. In the last few years, U.S. broadband has swiftly recovered and begun closing, or eliminating, the international gap. The depth of the “gap” then (2002) was far more pronounced — and relevant — than the “ranking differential” now (2009). Our wired and wireless broadband capabilities, services, and innovations now rival or exceed many of the world’s best.

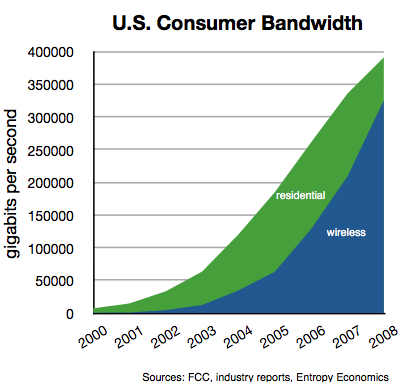

In a June 2009 report, I attempted to quantify the rise of American broadband. I found that by the end of 2008, U.S. consumers enjoyed 717 terabits per second of communications power, or bandwidth, equal to 2.4 megabits per second on a per capita basis. Today, bandwidth per capita approaches 3 megabits per second, an almost 23-fold increase since the dawn of deregulation in 2003.

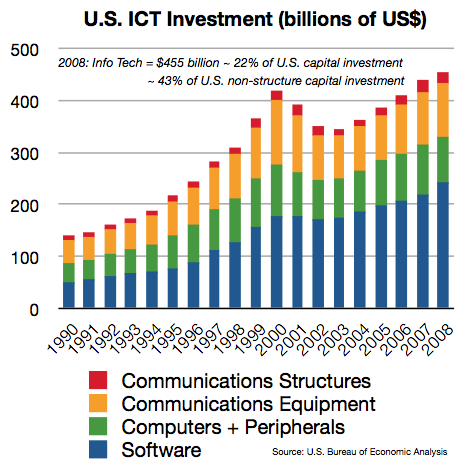
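
For readers who want to check the arithmetic behind the per capita figures, here is a rough sketch in Python. The 717 terabits per second, the roughly 3 megabits per second today, and the almost 23-fold multiple come from the text above. The population of about 300 million is a rounding assumption, not a number from the June 2009 report, and the names are purely illustrative.

```python
# Back-of-the-envelope check of the per capita bandwidth figures.

TOTAL_BANDWIDTH_BPS = 717e12    # 717 terabits per second at end of 2008 (from the text)
ASSUMED_POPULATION = 300e6      # assumption: roughly 300 million U.S. residents


def per_capita_mbps(total_bps, population):
    """Convert total bandwidth in bits per second to megabits per second per person."""
    return total_bps / population / 1e6


per_capita_2008 = per_capita_mbps(TOTAL_BANDWIDTH_BPS, ASSUMED_POPULATION)

# "Almost 23-fold" growth to "approaches 3 Mbps" implies a rough 2003 baseline.
CURRENT_PER_CAPITA_MBPS = 3.0   # approximate, from the text
GROWTH_MULTIPLE = 23            # approximate, from the text
implied_2003_mbps = CURRENT_PER_CAPITA_MBPS / GROWTH_MULTIPLE

print(f"End of 2008: {per_capita_2008:.2f} Mbps per person")
print(f"Implied 2003 baseline: {implied_2003_mbps * 1000:.0f} kbps per person")
```

Under those assumptions the 2008 figure works out to about 2.39 megabits per second per person, consistent with the 2.4 cited, and the implied 2003 baseline is on the order of 0.13 megabits per second.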

This rise was possible because U.S. info-tech investment in 2008, to pick the most recent year, was $455 billion, or 22% of all America’s capital investment. Communications service providers alone invested some $65 billion. And this is real investment. As you can see in the graph below, there was another peak in 2000. But much of that era’s investment was due to (1) Y2K preparations; (2) the concentrated build-out of long-haul optical networks (too much capacity at the time, but useful over the, pun intended, “long haul”); and (3) wasteful duplication by communications service providers employing regulatory arbitrage strategies designed to exploit the very type of open access policies Benkler urges again. This new U.S. surge in info-tech investment looks to be far more sustainable, based on real businesses and sound incentives, not some centralized policy to pick “winners and losers.”

A number of other problems plague the Benkler report.

As George Ou explains, the international data on broadband “speed” and prices are highly suspect. Benkler repeats findings from the oft-cited OECD studies that show the U.S. is 15th in the world in broadband penetration. Benkler also supplements previous reports with apparently corroborating evidence of his own. But Scott Wallsten (now at the FCC) adjusted the OECD data to correct for household size and other factors and found the U.S. is actually more like 8th or 10th in broadband penetration. South Korea, the consensus global broadband leader, is just 6th in the broadband penetration metric. The U.S. and most other OECD nations, moreover, will reach broadband saturation in the next few years. Average download speeds in the U.S., Wallsten found, were in line with the mid-tier of OECD nations, behind Korea, Japan, Sweden, and the Netherlands. But Ou thinks there is yet more evidence to question the quality of the international data and thus the argument that the U.S. is even this small distance behind. He writes:

> US broadband providers deliver the closest actual throughput to what is being advertised, and it is well above 90% of the advertised rate. When we consider the fact that advertised performance is often quoting raw signaling speeds (sync rate) which include a typical 10% protocol overhead and the measured speeds indicate payload throughput, US broadband providers are delivering almost exactly what they are advertising. By comparison, consumers in Japan are the least satisfied with their broadband service despite the fact that they have the fastest broadband service. This dissatisfaction is due to the fact that Japanese broadband customers get far less than what was advertised to them. The Ofcom data is further backed up by Akamai data which provides the largest sample of real-world performance data in the world. When real-world speeds are compared, the difference is nowhere near the difference in advertised speeds.
>
> Cable broadband companies in Japan don’t really have more capacity than their counter parts in the United States yet they offer “160 Mbps” service. While that sounds even more impressive than the Fiber to the Home (FTTH) offerings in Japan or the U.S., it’s important to understand that the total capacity of the network may only be 160 or 320 Mbps shared between an entire neighborhood with 150 subscribers. Even if only 30 of those customers in a neighborhood takes up the 160 Mbps service, just 2 of those customers can hog up all the capacity on the network.
>
> While Verizon may charge more per Mbps for FTTH service than their Japanese counter parts, they aren’t nearly as oversubscribed and they usually deliver advertised speeds or even slightly higher than advertised speeds. More to the point, Verizon’s 50 Mbps service is a lot closer to the 100 Mbps services they offer in Japan than the advertised speeds suggest. Oversubscription is a very important factor and it is the reason businesses will pay 20 times more per Mbps for dedicated DS3 circuits with no oversubscription on the access link. If Verizon were willing to oversell and exaggerate advertised bandwidth as much as FTTH operators in Japan, they could easily provide “100” Mbps or even “1000” mbps FiOS service over their existing GPON infrastructure. The risk in doing this is that Verizon might lose some customer satisfaction and trust when the actual performance (while higher than current levels) don’t live up to the advertised speeds.
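
The oversubscription arithmetic in the Japanese cable example can be sketched in a few lines of Python. The 160 and 320 Mbps shared capacities, the 160 Mbps advertised tier, and the 30 subscribers come from the passage above. The function names are hypothetical, and the model is deliberately crude: it ignores protocol overhead and assumes each subscriber can actually draw the full advertised rate.

```python
import math


def oversubscription_ratio(subscribers, advertised_mbps, shared_capacity_mbps):
    """Total bandwidth sold divided by the capacity the subscribers actually share."""
    return subscribers * advertised_mbps / shared_capacity_mbps


def users_to_saturate(advertised_mbps, shared_capacity_mbps):
    """Smallest number of subscribers running at the full advertised rate that fills the link."""
    return math.ceil(shared_capacity_mbps / advertised_mbps)


def fair_share_mbps(active_subscribers, shared_capacity_mbps):
    """Even split of the shared capacity if every listed subscriber is active at once."""
    return shared_capacity_mbps / active_subscribers


ADVERTISED_MBPS = 160      # advertised tier, from the quoted passage
SUBSCRIBERS_ON_TIER = 30   # subscribers taking that tier, from the quoted passage

for capacity in (160, 320):  # the two shared-capacity cases mentioned in the passage
    ratio = oversubscription_ratio(SUBSCRIBERS_ON_TIER, ADVERTISED_MBPS, capacity)
    hogs = users_to_saturate(ADVERTISED_MBPS, capacity)
    share = fair_share_mbps(SUBSCRIBERS_ON_TIER, capacity)
    print(f"{capacity} Mbps shared: {ratio:.0f}:1 oversubscribed, "
          f"{hogs} full-rate user(s) fill the link, "
          f"{share:.1f} Mbps each if all {SUBSCRIBERS_ON_TIER} are active")
```

With 320 Mbps of shared capacity the tier is sold 15 times over and two full-rate users fill the link, which matches the figure in the quote. With only 160 Mbps, a single user does.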

Even absent these important corrections and nuances, we should understand that in today’s world — with today’s computers, today’s applications, today’s media content, and, importantly, the rest of today’s Internet infrastructure, from data farms and content delivery networks to peering points and all the latency-inducing hops, buffers, and queues in between — there is no appreciable difference for even a high-end consumer between 100 Mbps and 50 Mbps. A Verizon FiOS customer with 50 Mbps thus does not suffer a miserable “half” the capability of a Korean with “100 Mbps” service and may in fact enjoy better overall service. But some of the metrics in the Benkler report suggest the “100 Mbps” user is 100% better off.

Another important market distinction: The U.S. has by far the largest cable TV presence of any nation reviewed. Cable has a larger broadband share than DSL+fiber, and has since the late 1990s. No other nation has a market so nearly divided between two very substantial technology/service platforms. This unique environment makes many of the Benkler comparisons less relevant and the policy points far less salient. In a market where the incumbent telecom provider is regulated and is subject to far less intermodal competition (as from the ubiquitous U.S. cable MSOs), the regulated company earns a range of guaranteed returns on various products and may also be ordered to deploy certain network technologies by the regulator.

In France, just three DSL operators account for 97%-98% of all DSL subscribers. DSL subscribers, moreover, are nearly all of France’s broadband subscribers. So how the open access regulations Benkler lauds lead to a better outcome is unclear. Presumably, the key virtue of open access is “more competition” at the service, or ISP, layer of the network. But if there’s not more competition, what is the open access mechanism that leads to better outcomes? What is the link between “open access” policies and investment or prices? And thus the link between policy and “penetration” or “speed” or “quality”? It is easy to see how open access can by force drive down prices for existing products and technologies. But how open access incentivizes investment in next generation networks is never explained. Several steps are missing from Benkler’s analysis.

Let’s take a deep breath before we let Washington topple another industry — a healthy, growing one at that.

— Bret Swanson